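The first step is to install the XGBoost library if it is not already installed. XGBoost is also an important library for DLI training. With conda, you can pick one of the available packages from the list; for example, to install the first one on the list, mndrake/xgboost (for Windows 64-bit), run:

`conda install -c mndrake xgboost`

If you're on a Unix system, you can choose any other package marked linux-64 on the right. To build and install from source instead, run `python setup.py install` from the `python-package/` directory of the XGBoost source tree (recent XGBoost releases use `pip install .` in that directory instead).

After fitting an `XGBClassifier` with its default parameters, printing the model gives the following output (this listing comes from an older XGBoost release; newer versions print a different set of defaults):

`XGBClassifier(base_score=0.5, booster="gbtree", colsample_bylevel=1, colsample_bynode=1, colsample_bytree=1, gamma=0, learning_rate=0.1, max_delta_step=0, max_depth=3, min_child_weight=1, missing=None, n_estimators=100, n_jobs=1, nthread=None, objective="multi:softprob", random_state=0, reg_alpha=0, reg_lambda=1, scale_pos_weight=1, seed=None, silent=None, ...)`

For the regressor, the evaluation prints the R² score with `print(metrics.r2_score(expected_y, predicted_y))` and the mean squared log error with `print(metrics.mean_squared_log_error(expected_y, predicted_y))`, then plots predicted against expected values with `sns.regplot(x=expected_y, y=predicted_y, fit_reg=True)`.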
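To make the two regression metrics concrete, here is a minimal pure-Python sketch of what `r2_score` and `mean_squared_log_error` compute. It assumes plain lists of non-negative values; scikit-learn's versions also handle arrays, sample weights and multi-output targets. The helper names `r2` and `msle` and the sample numbers are hypothetical, chosen only for illustration.

```python
import math


def r2(expected, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot (hypothetical helper)."""
    mean_y = sum(expected) / len(expected)
    ss_res = sum((y - p) ** 2 for y, p in zip(expected, predicted))  # residual sum of squares
    ss_tot = sum((y - mean_y) ** 2 for y in expected)  # total sum of squares
    return 1.0 - ss_res / ss_tot


def msle(expected, predicted):
    """Mean squared log error using log(1 + x), so zero values are allowed (hypothetical helper)."""
    if any(v < 0 for v in list(expected) + list(predicted)):
        raise ValueError("MSLE is undefined for negative values")
    return sum(
        (math.log1p(y) - math.log1p(p)) ** 2 for y, p in zip(expected, predicted)
    ) / len(expected)


# Made-up example values, not results from the tutorial.
expected_y = [3.0, 5.0, 2.5, 7.0]
predicted_y = [2.5, 5.0, 3.0, 8.0]
print(r2(expected_y, predicted_y))
print(msle(expected_y, predicted_y))
```

A perfect prediction gives an R² of 1 and an MSLE of 0; because MSLE compares logarithms, it penalizes relative rather than absolute errors, which suits targets spanning several orders of magnitude.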

HOW TO INSTALL XGBOOST PACKAGE IN PYTHON

XGBoost can be installed as a standalone library, and an XGBoost model can then be developed using the scikit-learn API. IBM Support also documents how to install the latest version of XGBoost and how to install XGBoost for WMLA. For GPU training, once you have the CUDA toolkit installed (Ubuntu users can follow this guide), you then need to install XGBoost with CUDA support (I think this worked out of the box on my machine).

To get started, create a quick and dirty classification model using XGBoost and its default parameters, then evaluate it with `print(metrics.classification_report(expected_y, predicted_y))` and `print(metrics.confusion_matrix(expected_y, predicted_y))`.

For regression, we use `datasets` to load the inbuilt Boston dataset and create objects `X` and `y` to store the data and the target values, respectively. Note that `load_boston` was removed in scikit-learn 1.2, so newer versions need a different regression dataset. Here, we use `XGBRegressor` as the machine learning model to fit the data, and then print the R² score and mean squared log error for the regressor.

XGBoost is an open-source library for gradient boosting, available in Python and other data science platforms. It is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable. It implements machine learning algorithms under the Gradient Boosting framework, providing parallel tree boosting (also known as GBDT or GBM) that solves many data science problems quickly and accurately. Its distinguishing features include tree penalization.

The power of Python lies in the packages available through the pip or conda package managers. To install the Python package, choose an installation method; with pip, run `pip install xgboost`. Some older tutorials install from inside Python with `import pip` followed by `pip.main(['install', ...])`, but pip 10 removed `pip.main` from its public interface, so run `python -m pip install xgboost` from a shell instead. Optionally, install additional packages for data visualization support.

For classification, we use `datasets` to load the inbuilt wine dataset and create objects `X` and `y` to store the data and the target values, respectively, then hold out 25% of the rows for testing:

`X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)`

Here, we use `XGBClassifier` as the machine learning model to fit the data. We then predict the output by passing `X_test`, store the real target in `expected_y`, and print the classification report and confusion matrix for the classifier.
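The tree penalization mentioned above refers to the regularization term in XGBoost's training objective. The formulas below follow the formulation in the XGBoost documentation's introduction to boosted trees: $T$ is the number of leaves in a tree, $w_j$ the weight of leaf $j$, and $G_j$, $H_j$ the sums of the first and second derivatives of the loss over the training instances that fall into leaf $j$ (with $L$ and $R$ denoting the two children of a candidate split). In the scikit-learn wrapper, $\gamma$ corresponds to the `gamma` parameter and $\lambda$ to `reg_lambda`; `reg_alpha` adds an L1 penalty on the leaf weights that is not shown here.

```latex
\mathcal{L} = \sum_{i} l(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^{2}

w_j^{\ast} = -\frac{G_j}{H_j + \lambda},
\qquad
\mathrm{Gain} = \frac{1}{2}\left[\frac{G_L^{2}}{H_L + \lambda}
  + \frac{G_R^{2}}{H_R + \lambda}
  - \frac{(G_L + G_R)^{2}}{H_L + H_R + \lambda}\right] - \gamma
```

A larger $\lambda$ shrinks every leaf weight toward zero, and a larger $\gamma$ means a split is only worth keeping when its loss reduction exceeds $\gamma$, which is how both penalties keep individual trees small.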

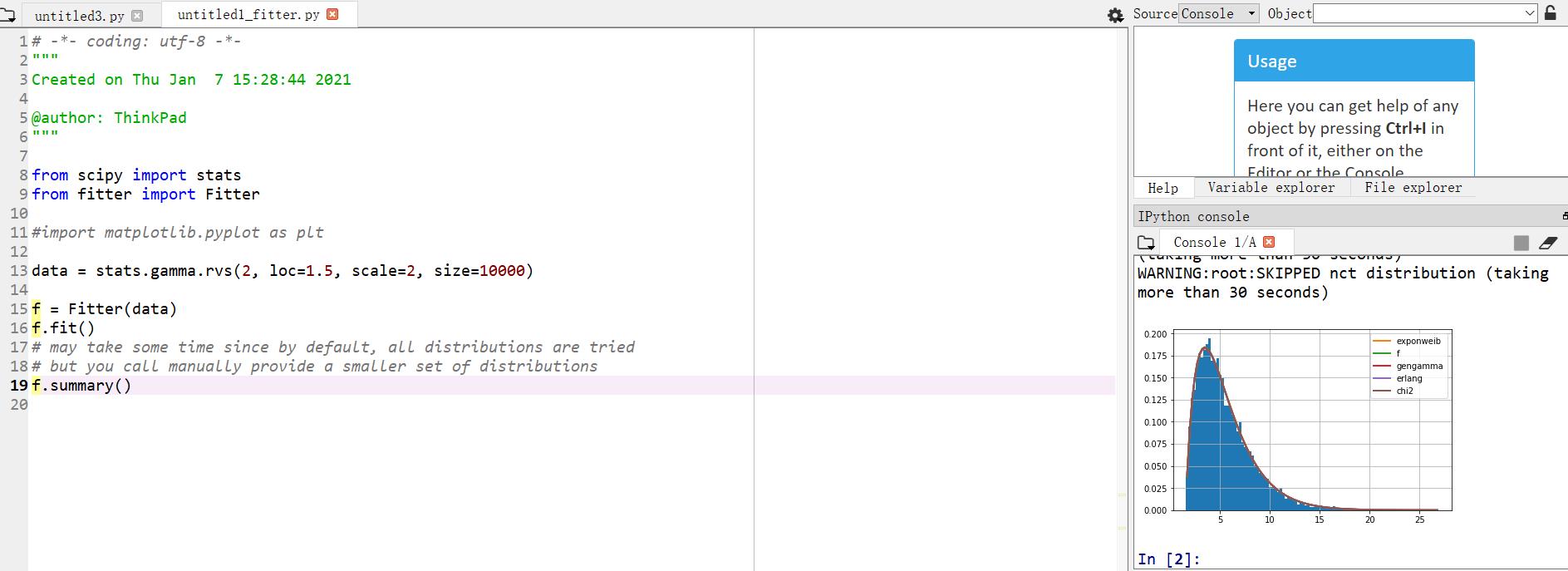

HOW TO INSTALL XGBOOST PACKAGE IN PYTHON CODE

Here we have imported various modules, such as `datasets` (`from sklearn import datasets`), `xgb` (`import xgboost as xgb`) and `train_test_split`, from different libraries. For now, just have a look at these imports; we will see how each one is used later in the code snippets.

`from sklearn.model_selection import train_test_split`
